AMD Instinct MI250 Sees Boosted AI Performance With PyTorch 2.0 & ROCm 5.4, Closes In On NVIDIA GPUs In LLMs

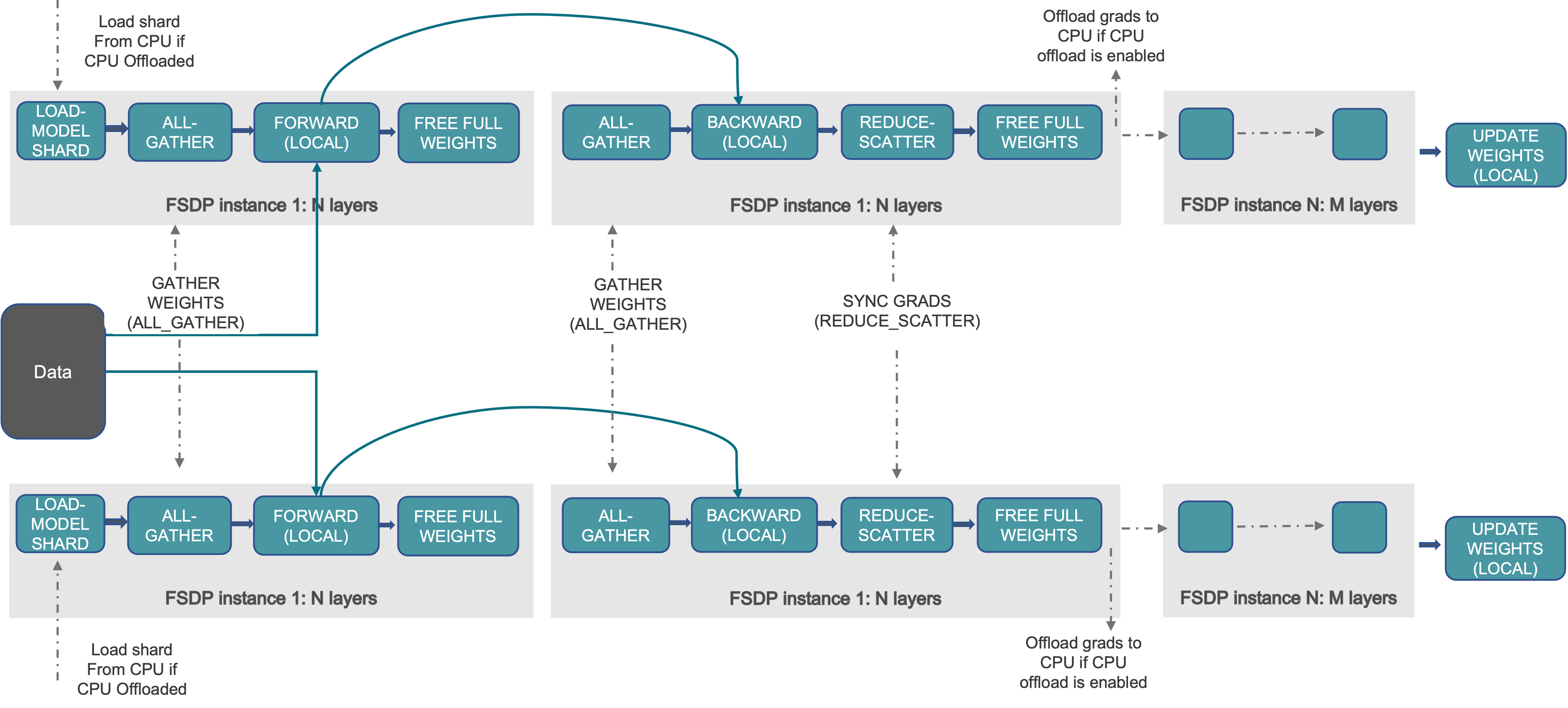

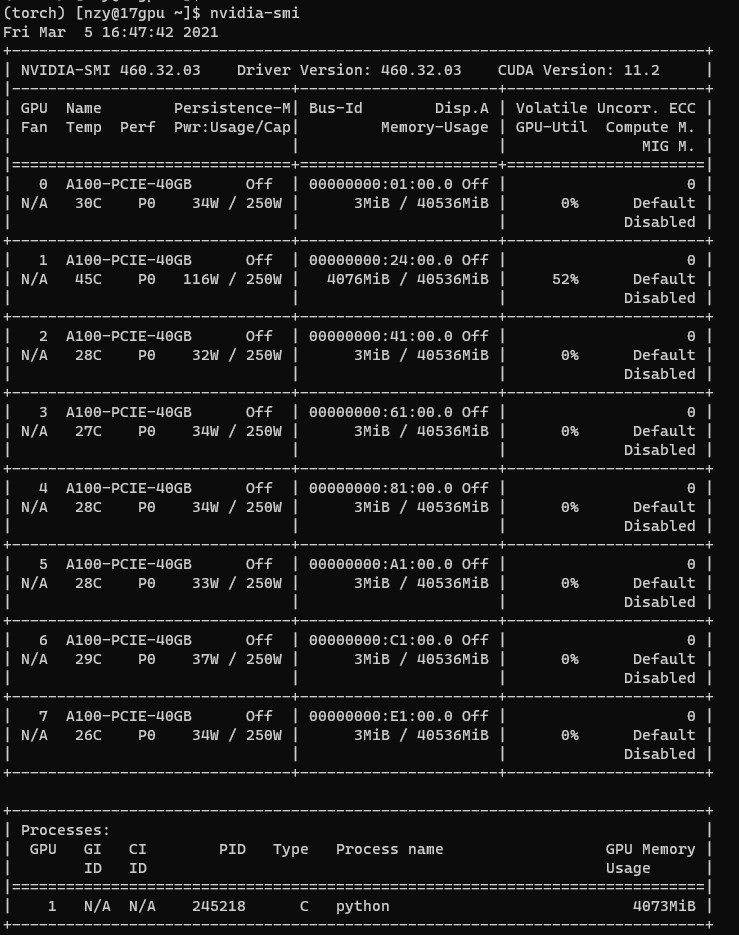

Data copy between GPUs failed.(Tesla A100, cuda11.1, cudnn8.1.0,pytorch1.8) - distributed - PyTorch Forums

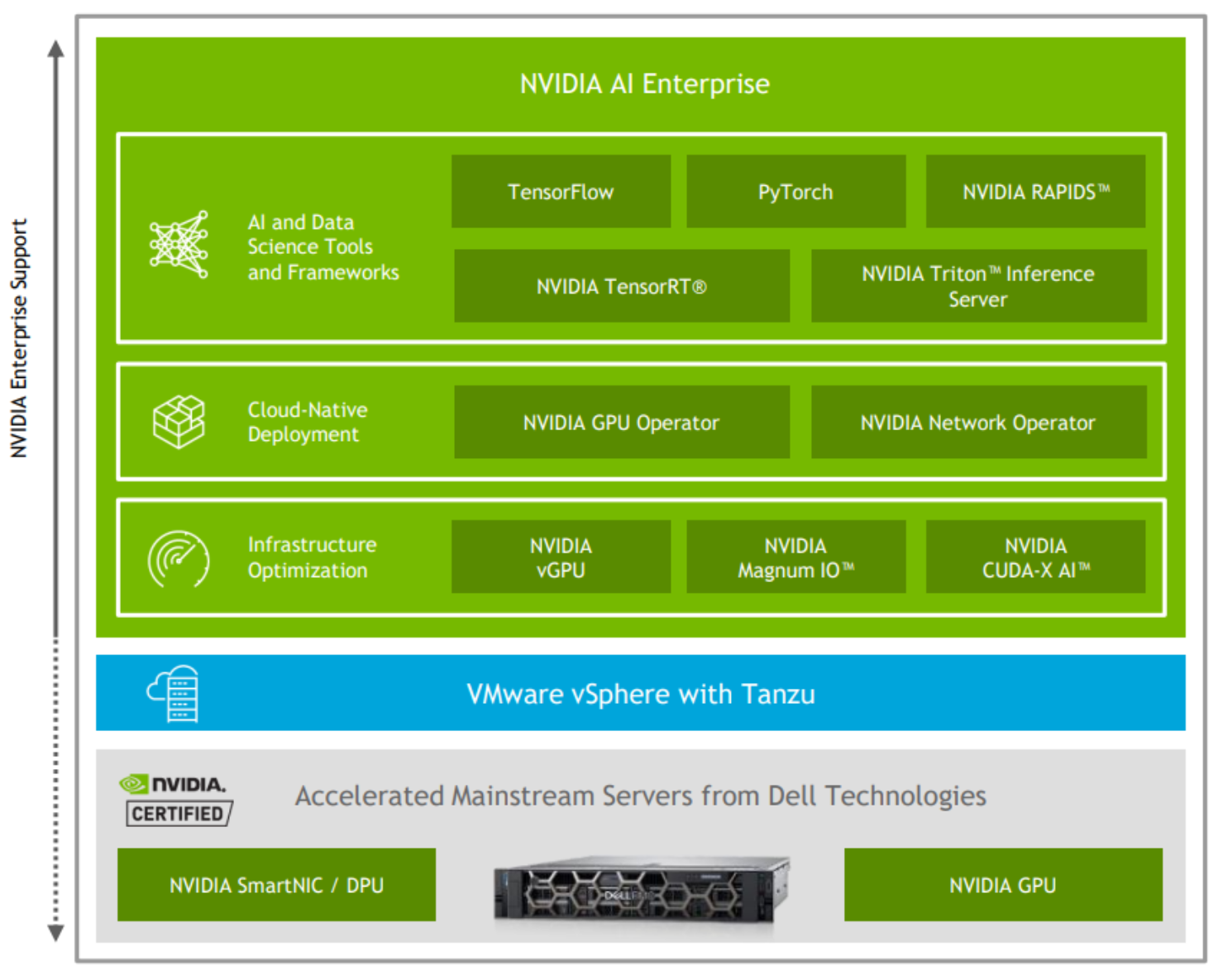

NVIDIA | White Paper - Virtualizing GPUs for AI with VMware and NVIDIA Based on Dell Infrastructure | Dell Technologies Info Hub

Torch.cuda.device_count() shows only one gpu in MIG A100 · Issue #102715 · pytorch/pytorch · GitHub